Last year I failed to write a retrospective blog post, so, lesson learned, I started a little earlier this time: not 1 day, but 3 days before New Year :). A retrospective is a great practice, and I hope it gives me some value when I read it next year and laugh at my previous achievements.

I’ll try to cover 4 basic areas: Career, Development, Blog, Personal.

Career

I continue to work for e-conomic.com. As I said here, e-conomic is the most important thing that happened to me in 2010, and it kept its influence through 2011. I’m still a product developer there, but in general the situation has been changing radically. First of all, we are now a much bigger team. Both the Ukrainian and Danish parts of the team are growing, and new cool guys keep joining us. Second, we finished up a few projects at the beginning of the year and joined a very cool adventure that I briefly described here. Sure, I have fun and boring, easy and tough, good and bad days there. But the overall impression is still very good. As long as we keep doing what we are doing, with the same level of passion and team atmosphere, we are on the right track.

Besides e-conomic, something else happened to me this year that had a direct impact on my career - Kiev ALT.NET. Unfortunately, I did not blog too much about that community, just a brief mention here. Community is very important to any developer and I’m really happy I found one. I didn’t even notice how I became a speaker, actually. After a few nervous tries at Kiev ALT.NET, I’ve managed to give about 8 public talks this year. That might not be much, but it is a great achievement for me. Speaking opened new opportunities, especially for meeting new people. But first of all, it is a great joy and a motivation to learn new things.

With the great atmosphere and enthusiastic people inside Kiev ALT.NET, I’ve launched another project called Kiev Beer && Code. The idea is taken from the Seattle Beer && Code community; it is nothing more than developers gathering for social coding. The community is very young and, to be honest, I don’t put too much effort into its promotion, but it is just the very beginning. I’m very happy with our current Beer && Code team and will be even happier if new guys join.

I became an MVB (Most Valuable Blogger) for DZone, which I’m really proud of. The value goes both ways: DZone uses my content, and I get additional traffic. I hope we stay partners for many years.

At the end of the year I tried myself in a completely new area - training. Thanks to the XP Injection training center in Kiev, I was invited to a 2-day training session, “TDD in .NET”. It turned out so successful that the chief trainer offered me a place in their trainers group. On December 22, I officially joined XP Injection. I’m very excited about that and hope I can do my best there. So far, we’ve planned some further TDD .NET trainings, but TDD definitely won’t be the only topic I can work on.

Development

I mean everything that I work on by myself: pet projects, self-education etc. I don’t remember who said it, but I strongly agree with this statement - “if you don’t write code at home, you are not progressing”. You have no time to learn at work. Work is the place to perform. You have to have a sharp axe if you come to chop wood.

My main axe-sharpening exercise is coding. I try to code as much as I can. For productive coding you have to have some projects. It doesn’t matter what exactly; what’s important is that you like the idea and you have a corresponding technology stack. The technology stack has to match the area you want to improve in. My main areas are still C#, HTML/CSS and JavaScript.

I was reading The Pragmatic Programmer this year, with its great advice: “Learn a new programming language each year”. I formulated a Pragmatic Product Developer advice, just for myself: “Release a new product each year”. Even if you release something at work, releasing something on your own is a completely different feeling. It takes a lot of effort, it’s painful.. But shipping is like a drug: you feel very happy as soon as you ship, and you feel very bad when you don’t ship for a while.

A while ago I wrote a small article where I mentioned what my targets are and what I’m working on. Let’s quickly go through it:

trackyt.net - I released it in late 2010 and provided support up to May of 2011. A lot of new features have been committed there, but I still slowed down the progress a lot. It has very low traffic, almost 0 active users. I was about to release version 2.0, absolutely different, with all the good things that I see in GTD. But I have to admit, I failed at that.

elmah.mvc.controller started out as a very simple helper for ASP.NET MVC applications that want to use ELMAH, but to my great surprise it turned out so popular that it finally got wrapped up into a micro product. Now it has ~2,000 downloads on NuGet, I’ve received several pull requests and gave a talk about it at Kiev ALT.NET. Even if it is so small, I treat that as a success.

github.commits.widget is also a micro product that gathered some attention. It was my attempt at working with the GitHub API in JavaScript, and I spent maybe 3 hours creating that code, but I know several sites that are using the widget. I had the joy of creating it and am happy that some people find it useful.

githubbadges.com is a little application that I wrote to participate in the 10K competition. The total zipped application content should not exceed 10K. I haven’t won, I haven’t got any mentions.. But again, it was a fun small project. I used some of my knowledge of the GitHub API and tried to write very tiny JS and CSS code.

candidate.net is something I started this summer at a hackathon. Since then I completely rewrote it, started to use the bounce framework inside and had plans to release it in October, but failed. I’m not throwing this project away and am going to ship it soon. I’m in yet-another-huge-refactoring cycle now, but after that I hope an alpha version could be ready by mid-January.

So, it looks like “I did something”.. but really nothing impressive. I’ll try to be a little more focused: no more than 2 projects in parallel, plus very clear success criteria for each project. But in general my criteria are still simple: actually ship it, learn something new, enjoy the ride.

Blog

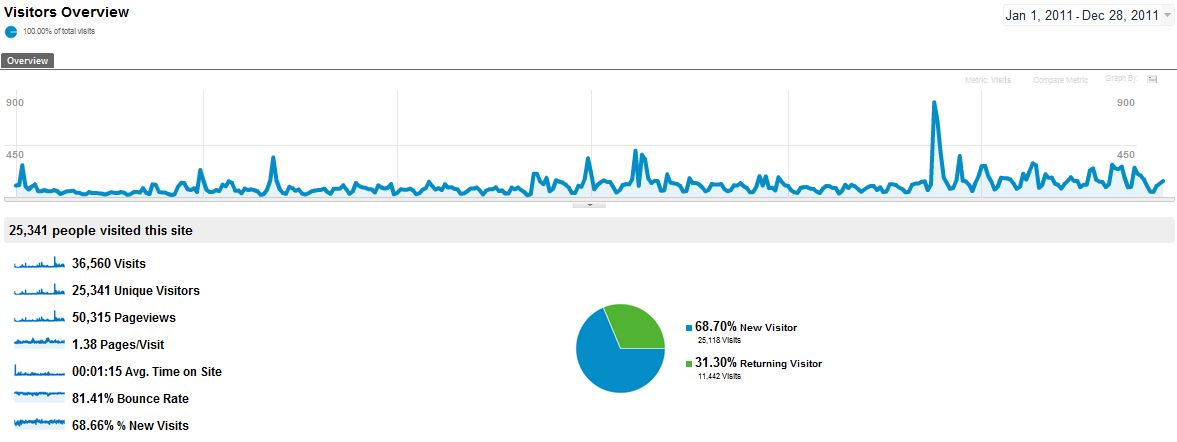

Thanks to Google Analytics it is very simple to do the analysis. Just take a look at these figures:

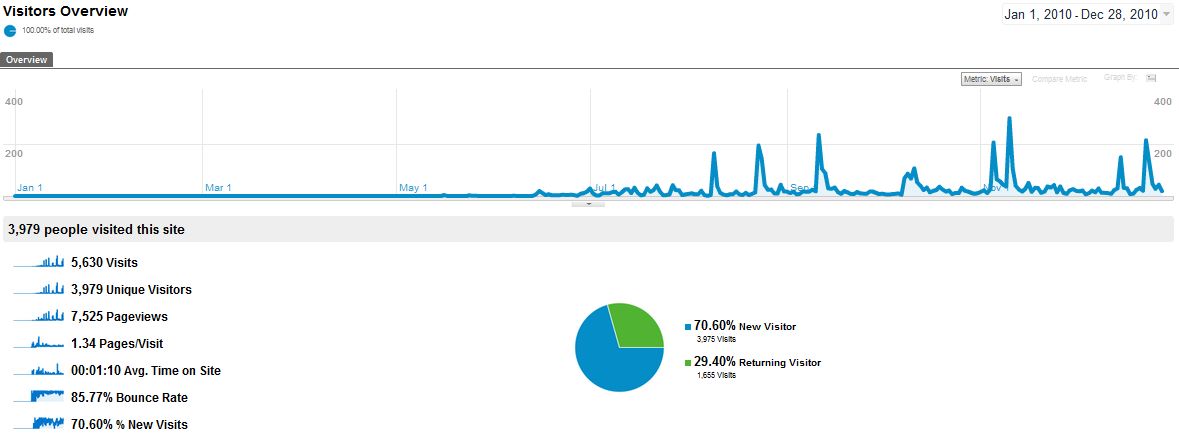

So, I’ve got 25,341 unique visitors and 36,560 visits in total. To understand what that means to me, let’s take a look at last year’s statistics for the same period of time.

In 2010 I got 3,979 unique visitors. It basically means this year’s traffic is ~637% of last year’s, an increase of ~537%. This is actually a huge number. I don’t expect that next year, of course.. but I hope that traffic will improve further.
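For anyone who wants to check the arithmetic, here is a minimal sketch using only the two unique-visitor counts quoted above. The function and variable names are mine, made up for illustration. It separates "this year as a percentage of last year" from "percentage increase", since the two are easy to mix up.

```python
# Unique-visitor counts quoted in the post
visitors_2010 = 3_979
visitors_2011 = 25_341


def traffic_change(previous: int, current: int) -> tuple[float, float]:
    """Return (current as a % of previous, % increase over previous)."""
    ratio_pct = current / previous * 100
    increase_pct = (current - previous) / previous * 100
    return ratio_pct, increase_pct


ratio_pct, increase_pct = traffic_change(visitors_2010, visitors_2011)
print(f"2011 is {ratio_pct:.0f}% of 2010, an increase of {increase_pct:.0f}%")
```

So ~637% is the ratio between the two years, while the growth itself is about 100 points lower.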

OK, what was the most popular content this year:

- Integrating ELMAH to ASP.NET MVC in right way, where I first described my approach to putting ELMAH into an ASP.NET MVC website.

- Implementation of REST service with ASP.NET MVC, which I blogged at the very beginning of the year, describing my vision of REST services in ASP.NET MVC. With my current experience I would probably change something there; I hope to make it the next popular post.

- Inside ASP.NET MVC: IDependencyResolver - Service locator in MVC, one of my articles in the Inside ASP.NET series. It actually made it to the top of the http://asp.net website, and I was really happy about it.

- How to start using Git in SVN-based organization, where I shared some experience of an “easy start” with Git even in an SVN-based company. It worked so nicely for me and my teammates that almost everyone is using Git now.

- ASP.NET developers disease, which I wrote under the impression of one of the job interviews we did. My main point there was aimed at guys who stick too much to WebForms and server-side coding. It was a little provocative, but I hope it was useful.

Personal

First of all, I got married. I strongly believe it is for good and for long.

I took several interesting trips, especially to Val Gardena with my friends at the beginning of the year, and to Japan. Unfortunately, I don’t foresee anything like that in 2012, so these will be kept as good memories.

I have to admit I cut down my sport activities too much. I almost stopped my every-morning exercises, kyokushin karate and rollerblading, doing them only very occasionally. This is not good at all and I already feel the bad influence of that. So, my goal for next year is to make it more balanced.

It was a great year for me. I wish a Merry Christmas and a Happy New Year to you, dear reader. I’m really looking forward to creating new content you would like and new products you would find useful. Let’s gather all the good things that happened this year and take them into the next one.

See you in 2012!