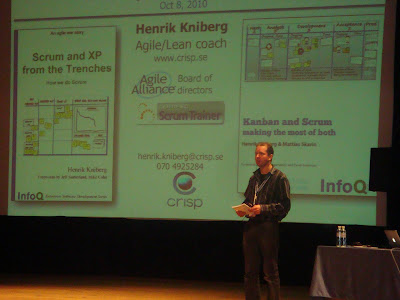

Henrik Kniberg's speech: The essence of Agile

Disclaimer: the text below is a compilation of notes I made at the Agileee 2010 conference while listening to different speakers. I do it to keep the knowledge I got at the conference and to share it with my colleagues and anyone else who is interested. It only reflects how I heard, interpreted and wrote down the original speech. It also includes my subjective opinion on some topics. So it may not reflect the author's opinion and original ideas 100%.

This was the first session at Agileee 2010. Because of its name I thought it would be yet another lecture on Agile/Scrum etc. It was, but Henrik made it really interesting and detailed. I heard a lot of new things there. So far it is my favorite speech of the conference.

Beginning

“I’m scared now :)” were the words Henrik started with. But his whole speech was clear and fluent.

Introduction

All the current Agile names and abbreviations confuse us: Scrum, Kanban, XP, TDD and so on. What are they about, and what problems do they try to solve?

Doing a software project can be compared to shooting at a goal with a cannonball. There is a Goal and a Cannon... and only one shot. After the shot we hope we hit the target. That’s why we try to do all the planning, risk management and other “do before” stuff. But the issue is that the target moves over time, so we always miss.

Looking at the percentage of successful and failed projects over the years, Henrik gave these figures.

Success of projects:

- Year 1994 - 15% success

- Year 2004 - 34% success

So we definitely learned something over those 10 years. What were the problems, and what have we learned so far?

Estimations

Different people, in different contexts, with slightly different input, produce different estimates. Henrik gave some really nice examples where an estimate doubled only because the spec was written across more pages, included some irrelevant details, or contained different items.

Let’s put it really simply: none of us can estimate! Actually we can, but our estimates suck. A lot of projects are considered failures because they did not meet their original estimates.

But why is the number of successful projects increasing? Because projects have gotten a lot smaller and, although there is no silver bullet, Agile is something that has improved the situation. Why?

- User involvement

- Executive management support

- Clear business objectives

- Optimizing scope

Agile in a nutshell

- Customers discover what they want

- Developers discover how to build it

- Responding to change is vital (to track the moving goal)

Agile approach to planning

So, if we are not able to make exact estimates and exact plans, what should our approach to planning be?

Agile principle: early delivery. If we are late with a release, we have to at least deliver the most important features at the planned time.

Releases have to be short. Henrik gave a nice metaphor: if we are waiting for a train that runs every 5 minutes, it is no big deal if we miss it. We just wait 5 minutes and take the next one. If it runs every 30 minutes, missing it will annoy us, but we can still wait. But consider a ship that sails to another city once a week or once a month. If we miss it, we are in trouble.

By keeping releases short, we act like the 5-minute train.

Scrum in a nutshell

Scrum tries to split things. The organization is split into teams, the product is split into backlog items, time is split into iterations. Delivering every sprint is the key factor.

Scrum defines different roles for the people involved:

- Stakeholders: users, helpdesk, customers

- Product Owner: the one who defines the vision and priorities

- Team: the group of people who decide how much to pull in and how to build it

- Scrum master: the one responsible for process leadership and coaching

The definition of done plays an important role in Scrum. It has to be clearly defined by the team: what exactly has to be done for a particular piece of work to be treated as done (tested, merged, release notes written and so on).

How to estimate? Don’t estimate time, let the people who do the work estimate, and estimate continuously. Planning poker is a nice agile estimation technique.

A sprint goes like this: Sprint planning, Daily scrum (it makes the process a white box, not a black box), something has to come out of the sprint, Demo (looking at the product), Retrospective (looking at the process).

XP in a nutshell

Scrum wraps XP. Scrum doesn’t know anything about programming, XP does: it introduces engineering practices. Scrum + XP is a great combination (but it is not strictly required).

XP contains a number of practices, but the most important are:

- Pair programming: short feedback loop (seconds)

- Unit tests: short feedback loop (minutes)

- Continuous integration: short feedback loop (hours)

Agile architecture

It might seem that Agile doesn’t care about architecture, since there is no value in architecture itself. That is partially true. Agile is goal oriented: it is about doing things quickly, releasing quickly, failing quickly.

Quick’n’dirty turns into Slow’n’dirty. If you don’t care about architecture at all and program in a patch style, you are in trouble after a while. The product simply becomes too difficult to extend and maintain.

On the other hand, big upfront design work can make customers unhappy: too much time is spent thinking about a beautiful architecture with no actual output (remember that working software is the primary Agile measure of progress).

It has to be balanced. Architecture has to be simple, because a simple architecture goes together with simple code. Clean and simple code is very important. But code is not an asset, code is a cost. Only some code has value. Simple code is easy to change.

What is the definition of simple code? Simple code is code that meets all of these requirements:

- All tests pass

- No duplication

- Readable

- Minimal

Sure, criteria such as readability and minimalism are subjective, and different people may have different opinions on them. But the team has to share the same vision.

Kanban in a nutshell

Kanban is a way of visualizing work. Kanban means “visual card”. Henrik mentioned David Anderson and the visualization of flow. See the board, talk near the board.

A board could consist of these sections: Backlog, Next, Dev (Ongoing, Done), Acceptance (Ongoing, Done), In production. It is great to see that Next can be empty. Analyzing the board is the way to manage a project with Kanban. Don’t put in too much, don’t pull too much. It is like a printer jam: don’t put in more paper. The theory of constraints is applied when running projects with Kanban.
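To make the “don’t pull too much” rule concrete, here is a minimal Python sketch of a board with work-in-progress limits. It is only an illustration and was not shown in the talk: the `Column` and `Board` names, the flattened column list (without the Ongoing/Done sub-columns) and the limit values are all assumptions.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Column:
    name: str
    wip_limit: Optional[int] = None  # None means "no limit" (assumption)
    cards: List[str] = field(default_factory=list)

    def has_room(self) -> bool:
        # A column accepts a card only while it is below its limit.
        return self.wip_limit is None or len(self.cards) < self.wip_limit


class Board:
    def __init__(self, columns: List[Column]) -> None:
        self.columns: Dict[str, Column] = {c.name: c for c in columns}

    def add(self, card: str, column: str = "Backlog") -> None:
        # New work enters the board here; this sketch does not check limits on entry.
        self.columns[column].cards.append(card)

    def pull(self, card: str, source: str, target: str) -> bool:
        """Move a card only if the target column has room; return True on success."""
        src, dst = self.columns[source], self.columns[target]
        if card not in src.cards:
            raise ValueError(f"{card!r} is not in {source!r}")
        if not dst.has_room():
            return False  # the "printer jam": do not push more work in
        src.cards.remove(card)
        dst.cards.append(card)
        return True


if __name__ == "__main__":
    # Hypothetical limits, chosen only for the demo.
    board = Board([
        Column("Backlog"),
        Column("Next", wip_limit=2),
        Column("Dev", wip_limit=2),
        Column("Acceptance", wip_limit=1),
        Column("In production"),
    ])
    for card in ("A", "B", "C"):
        board.add(card)
    for card in ("A", "B", "C"):
        moved = board.pull(card, "Backlog", "Next")
        print(card, "pulled into Next" if moved else "stays in Backlog, Next is full")
```

When a pull is refused, the card simply stays where it is. On a real board that is the signal to help finish work downstream instead of starting something new.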

Comparing methodologies

The comparison has to be done with only one goal: understanding. It is like comparing a knife to a fork. It doesn’t make much sense to compare tools in general; decide which tool is best for solving the problem at hand.

Comparison by number of rules:

- More prescriptive: XP (13), RUP (120+)

- More adaptive: Kanban (3), Do Whatever (0)

- In the middle: Scrum (9)

Approach to using any new methodology

Henrik gave a really nice explanation of how to approach a new thing. It is Shuhari. It comes from Japanese martial arts. I liked that a lot, since I’m a fan of karate :).

- Shu - follow the process (shut up and listen to master)

- Ha - adapt the process, innovate the process

- Ri - never mind the process, forget the process, create your process

Conclusions

At the end of the session Henrik gave several well-known (but usually forgotten) principles to follow.

- Use the right tools - choose exactly what you need; it can be difficult, and mistakes can happen here

- Don’t be dogmatic - don’t treat any practice as dogma, because it is not; reach the Ri level of learning

Perfection is a direction, not a place - don’t forget it!