Now that we are clear on what REST is all about and how to verify REST methods, it is time to implement our own service. I’ll create it from scratch, reusing only some data structures and the test framework from my other projects.

Project vision and goal

Assume we run a blogging service with a bunch of customers. Customers are pretty happy with the service, since they can publish new blog posts, collect comments, build social networks, etc. But since we have already stepped into the “API epoch”, customers started to want more. Namely, they want an API so they can work with their data from their own applications. Vendors demand an API to create cool new editors for our blog service. The CEO wants us to create an API, because he has just found out that applications without an API are doomed. The business goal is clear, so let’s implement it. We are going to create a REST-style API that uses JSON as the data exchange format. The API will allow users to get all posts or a single post, create new posts and delete existing ones.

Set it up

I’ve created an empty ASP.NET MVC2 application in Visual Studio and added it to GitHub (please don’t be confused by the bunch of other folders you see in the solution; they are part of the UppercuT and RoundhousE frameworks that I use for all my projects). This application hosts the new REST service, which we are going to implement using the functionality of the MVC2 framework.

Initial project content

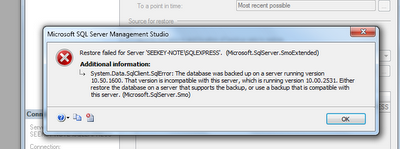

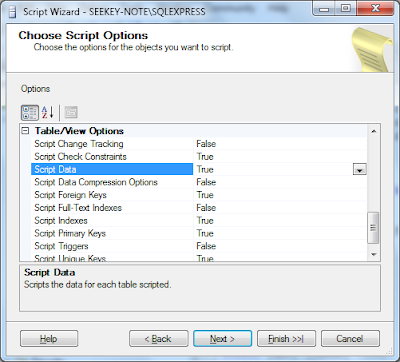

In the application's Model folder I added a LINQ to SQL Classes item and dragged the BlogPosts table from the restexample database onto the designer. This generates a new RestExampleDataContext class, and the BlogPost entity becomes part of it:

```csharp
[global::System.Data.Linq.Mapping.DatabaseAttribute(Name="restexample")]
public partial class RestExampleDataContext : System.Data.Linq.DataContext
{
    // implementation...
}

[global::System.Data.Linq.Mapping.TableAttribute(Name="dbo.BlogPosts")]
public partial class BlogPost : INotifyPropertyChanging, INotifyPropertyChanged
{
    // implementation...
}
```

I’ve added some sample data to the database that will be used by the tests:

```sql
insert into BlogPosts (Url, Title, Body, CreatedDate, CreatedBy)
values ('my-post-1', 'My post 1', 'This is first post', CAST('2011-01-01' as datetime), 'alexander.beletsky');

insert into BlogPosts (Url, Title, Body, CreatedDate, CreatedBy)
values ('my-post-2', 'My post 2', 'This is second post', CAST('2011-01-02' as datetime), 'alexander.beletsky');

insert into BlogPosts (Url, Title, Body, CreatedDate, CreatedBy)
values ('my-post-2', 'My post 3', 'This is third post', CAST('2011-01-03' as datetime), 'alexander.beletsky');
```

API Interface

The interface we are going to implement looks like this:

GET http://localhost/api/v1/posts/get/{username}/{posturl}

GET http://localhost/api/v1/posts/all/{username}

POST http://localhost/api/v1/posts/post/{username}

DELETE http://localhost/api/v1/posts/delete/{username}/{posturl}

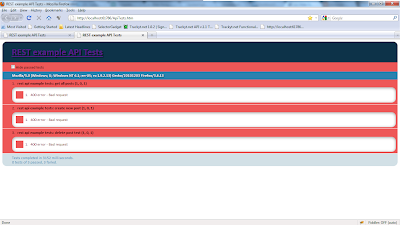

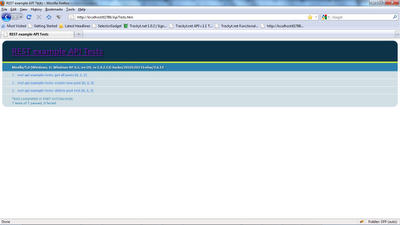

For each of these interface methods, I’m adding JavaScript integration tests in the same way as I described previously. Since there is no implementation yet, all of them fail for now.

Please take a look at those tests before proceeding to the implementation part; they will make some things clearer.

API folder structure

It is a matter of choice, but I prefer to put all API-related code into a separate folder, called (who would have guessed?) “API”. Its structure is very similar to an Area: it has Controllers, Models and a Registration class. It does not have any Views, since the API does not expose any UI.

Routing

If I were asked to describe what an ASP.NET MVC2 application is about, I would answer: “It is a mapping from an HTTP request with a particular URL to the corresponding method of a handler class. The handler is called a controller, and the method is called an action.” So the primary goal of an MVC application is to define such a mapping. In MVC terms, this mapping is called routing. It is all about routing.

Looking at our interface one more time, we come up with the following routing definition for the API:

```csharp
using System.Web.Mvc;

namespace Web.API.v1
{
    public class ApiV1Registration : AreaRegistration
    {
        public override string AreaName
        {
            get { return "ApiV1"; }
        }

        public override void RegisterArea(AreaRegistrationContext context)
        {
            // {postUrl} is optional, so both ".../all/{userName}" and
            // ".../delete/{userName}/{postUrl}" match this single route
            context.MapRoute(
                "ApiV1_posts",
                "api/v1/posts/{action}/{userName}/{postUrl}",
                new { controller = "APIV1", postUrl = UrlParameter.Optional });
        }
    }
}
```
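To make the mapping concrete, here is a simplified JavaScript model of how this route template turns a URL into route values. It is only a sketch (matchRoute is a hypothetical name, and real ASP.NET routing also handles constraints, catch-all parameters and more), but it shows why {userName} always has to be present: it is a required segment, so a URL with only one segment after the action binds that segment to userName and leaves postUrl empty.

```javascript
// Simplified model of the "ApiV1_posts" route (hypothetical helper, not ASP.NET code).
// Template segments in braces are parameters; everything else must match literally.
const TEMPLATE = "api/v1/posts/{action}/{userName}/{postUrl}";
const DEFAULTS = { controller: "APIV1" };   // defaults passed to MapRoute
const OPTIONAL = new Set(["postUrl"]);      // postUrl = UrlParameter.Optional

function matchRoute(path, template = TEMPLATE) {
  const urlParts = path.replace(/^\/+|\/+$/g, "").split("/");
  const tplParts = template.split("/");
  if (urlParts.length > tplParts.length) return null; // too many segments

  const values = { ...DEFAULTS };
  for (let i = 0; i < tplParts.length; i++) {
    const tpl = tplParts[i];
    const seg = urlParts[i];
    const param = /^\{(\w+)\}$/.exec(tpl);

    if (!param) {
      // Literal segment: compared case-insensitively, like ASP.NET routing
      if (seg === undefined || seg.toLowerCase() !== tpl.toLowerCase()) return null;
      continue;
    }

    const name = param[1];
    if (seg === undefined || seg === "") {
      if (!OPTIONAL.has(name)) return null; // a required parameter is missing
      continue;
    }
    values[name] = decodeURIComponent(seg);
  }
  return values;
}
```

Running it against the URLs used by the tests is a quick way to check which action and parameters a given call will hit.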

API Controller

Once routing is defined, it is time to add the actual handler, the controller class. Initially it is empty, without any actions; it only initializes the data context object.

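```csharp
using System;
using System.Linq;
using System.Web.Mvc;
// plus the namespace that contains the generated RestExampleDataContext

namespace Web.API.v1.Controllers
{
    public class ApiV1Controller : Controller
    {
        // One LINQ to SQL data context per controller instance
        private RestExampleDataContext _context = new RestExampleDataContext();

        // actions..
    }
}
```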

API Actions implementation

We now have the complete infrastructure to start the implementation: solution, project, interface, tests, routing and controller. Now it is time for the actions.

Get all posts method

http://localhost/api/v1/posts/all/{username}

It receives a username as a parameter and is expected to return all blog posts belonging to this user. The code is:

```csharp
[HttpGet]
public JsonResult All(string userName)
{
    var posts = _context.BlogPosts.Where(p => p.CreatedBy == userName);

    return Json(
        new { success = true, data = new { posts = posts.ToList() } },
        JsonRequestBehavior.AllowGet);
}
```

The action method reads as: respond to the HTTP GET verb, get all records with the corresponding userName and return them as JSON.

The Json method of the Controller class is a really cool feature of the MVC2 framework. It takes an object (here, an instance of an anonymous type) and serializes it to JSON. So, the new { success = true, data = new { posts = posts.ToList() } } object will be serialized into:

```json
{"success":true,"data":{"posts":[
  {"Id":1,"Url":"my-post-1","Title":"My post 1","Body":"This is first post","CreatedDate":"\/Date(1293832800000)\/","CreatedBy":"alexander.beletsky","Timestamp":{"Length":8}},
  {"Id":2,"Url":"my-post-2","Title":"My post 2","Body":"This is second post","CreatedDate":"\/Date(1293919200000)\/","CreatedBy":"alexander.beletsky","Timestamp":{"Length":8}},
  {"Id":3,"Url":"my-post-2","Title":"My post 3","Body":"This is third post","CreatedDate":"\/Date(1294005600000)\/","CreatedBy":"alexander.beletsky","Timestamp":{"Length":8}}
]}}
```
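Note the CreatedDate values: the serializer writes dates as strings of the form /Date(milliseconds)/ (with the slashes escaped in the raw JSON), where the number counts milliseconds since 1970-01-01T00:00:00Z. For example, 1293832800000 is 2010-12-31T22:00:00Z, which is midnight of 2011-01-01 at UTC+2. JavaScript clients have to convert these strings themselves. A minimal sketch, with parseAspNetDate and normalizePosts as hypothetical helper names:

```javascript
// Hypothetical client-side helpers for the "/Date(ms)/" strings in the response.
// After JSON.parse, the escaped "\/" sequences in the raw JSON become plain "/".
function parseAspNetDate(value) {
  const match = /^\/Date\((-?\d+)\)\/$/.exec(value);
  // The number is milliseconds since 1970-01-01T00:00:00Z
  return match ? new Date(Number(match[1])) : null;
}

// Parses a response of the "all" action and converts every CreatedDate to a Date
function normalizePosts(responseText) {
  const result = JSON.parse(responseText);
  return result.data.posts.map(post => ({
    ...post,
    CreatedDate: parseAspNetDate(post.CreatedDate),
  }));
}
```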

Nice and clean.

Take note of JsonRequestBehavior.AllowGet. This is a special flag you have to pass to the Json method if it is called from a GET handler; without it, MVC refuses to return JSON in response to a GET request. This default exists to prevent the JSON Hijacking type of attack. So, if your API call returns sensitive user data, you should consider using POST instead of GET.
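The rule behind this flag is small enough to model. The following JavaScript sketch mirrors the check that MVC's JsonResult makes before writing a response; it is not the framework's source, and executeJsonResult and the JsonRequestBehavior object are illustrative names:

```javascript
// Illustrative model of the JsonRequestBehavior check (not MVC source code).
const JsonRequestBehavior = Object.freeze({ AllowGet: "AllowGet", DenyGet: "DenyGet" });

// DenyGet is the default, just as in MVC when you call Json(data) without the flag
function executeJsonResult(httpMethod, data, behavior = JsonRequestBehavior.DenyGet) {
  if (behavior === JsonRequestBehavior.DenyGet && httpMethod.toUpperCase() === "GET") {
    // MVC throws an InvalidOperationException at this point
    throw new Error("JSON responses to GET requests are blocked; use JsonRequestBehavior.AllowGet");
  }
  return JSON.stringify(data);
}
```

A related design point: because every response here is wrapped in an object ({ success, data }) rather than returned as a bare array, the top-level JSON value is an object literal, which the classic array-based hijacking technique cannot exploit when the URL is loaded through a script tag. That is a mitigation, not a replacement for using POST with sensitive data.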

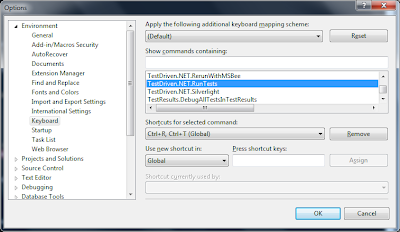

Let’s re-run the test suite and see that the first test is green now.

Create new post method

http://localhost/api/v1/posts/post/{username}

It receives a username as a parameter and the blog post content as the payload in the POST body. The code is:

```csharp
[HttpPost]
public JsonResult Post(string userName, PostDescriptorModel post)
{
    var blogPost = new BlogPost {
        CreatedBy = userName,
        CreatedDate = DateTime.Now,
        Title = post.Title,
        Body = post.Body,
        Url = CreatePostUrl(post.Title)
    };

    _context.BlogPosts.InsertOnSubmit(blogPost);
    _context.SubmitChanges();

    return Json(new { success = true, url = blogPost.Url });
}

// Builds a URL-friendly slug: strip punctuation, lower-case, trim, spaces become dashes
private string CreatePostUrl(string title)
{
    var titleWithoutPunctuation = new string(title.Where(c => !char.IsPunctuation(c)).ToArray());
    return titleWithoutPunctuation.ToLower().Trim().Replace(" ", "-");
}
```
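If a client wants to know the URL a title will produce (for instance, to show a preview before posting), the CreatePostUrl rules translate directly to JavaScript. A sketch, assuming a runtime with Unicode property escapes (Node.js 10+ or a modern browser); createPostUrl is a hypothetical port, not part of the project:

```javascript
// Hypothetical JavaScript port of the CreatePostUrl helper above.
// \p{P} (with the "u" flag) matches the Unicode punctuation categories
// Pc, Pd, Ps, Pe, Pi, Pf and Po, the same set char.IsPunctuation tests for.
function createPostUrl(title) {
  const withoutPunctuation = title.replace(/\p{P}/gu, "");
  // Same order as the C# code: lower-case, trim, then every space becomes "-"
  return withoutPunctuation.toLowerCase().trim().replace(/ /g, "-");
}
```

Two consequences of these rules are worth keeping in mind: hyphens are punctuation too, so existing dashes in a title are stripped, and the action does not check for duplicates, so two posts with the same title get the same URL.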

where PostDescriptorModel is defined as:

```csharp
namespace Web.API.v1.Models
{
    // Shape of the JSON payload sent to the "post" action
    public class PostDescriptorModel
    {
        public string Title { get; set; }
        public string Body { get; set; }
    }
}
```

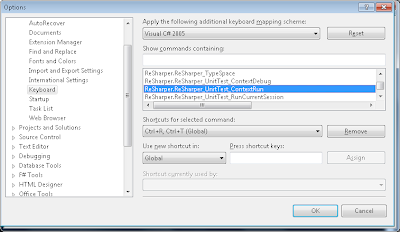

If you try to run this example as is, you will see that the PostDescriptorModel instance is null: out of the box, MVC2 cannot bind a JSON payload. But if you google a little, you will find an article by Phil Haack that addresses exactly this issue: “Sending JSON to an ASP.NET MVC Action Method Argument”. Support for JSON action method arguments is implemented in the MVC 2 Futures library (a library of useful extensions that are not yet part of the framework but are very likely to be included). Download it, add a reference to it in the project, and register JsonValueProviderFactory in Global.asax.cs:

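```csharp
// Global.asax.cs
protected void Application_Start()
{
    AreaRegistration.RegisterAllAreas();
    RegisterRoutes(RouteTable.Routes);

    // Lets MVC2 bind JSON request bodies to action parameters
    // (JsonValueProviderFactory comes from the MVC 2 Futures assembly, Microsoft.Web.Mvc)
    ValueProviderFactories.Factories.Add(new JsonValueProviderFactory());

    // ...
}
```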

Important:

- If you are using the MVC3 framework, you do not need the MVC Futures assembly, since JsonValueProviderFactory is already included in MVC3 and registered by default.
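On the client side, the request has to be marked as JSON for this to work: JsonValueProviderFactory only reads the body when the request's Content-Type starts with application/json; otherwise the model stays null just as before. A minimal sketch of building such a request in JavaScript, where buildCreatePostRequest is a hypothetical helper and the returned object can be passed to fetch as fetch(req.url, req):

```javascript
// Hypothetical helper that builds the request for the "post" action.
// The Content-Type header is what makes JsonValueProviderFactory read the body.
function buildCreatePostRequest(baseUrl, userName, title, body) {
  return {
    url: `${baseUrl}/api/v1/posts/post/${encodeURIComponent(userName)}`,
    method: "POST",
    headers: { "Content-Type": "application/json" },
    // Property names match PostDescriptorModel (Title, Body)
    body: JSON.stringify({ Title: title, Body: body }),
  };
}
```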

If I re-run the tests now, I’ll see that the “create new post” test is still red. That’s because the “get post” API method is not implemented yet.

Get post by url method

http://localhost/api/v1/posts/get/{username}/{posturl}

It receives the username and the post URL, and returns the blog post object in the response. The code is:

```csharp
[HttpGet]
public JsonResult Get(string userName, string postUrl)
{
    // null when no post matches; throws if more than one post matches
    var blogPost = _context.BlogPosts.Where(p => p.CreatedBy == userName && p.Url == postUrl).SingleOrDefault();

    return Json(new { success = true, data = blogPost }, JsonRequestBehavior.AllowGet);
}
```
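SingleOrDefault is worth a closer look, since it decides what the lookup returns: null when no post matches, the post itself when exactly one matches, and an exception when more than one does. That last case is reachable with the sample data above, where both the second and the third post use the URL 'my-post-2'. A JavaScript sketch of those semantics over the seed rows (singleOrDefault and findPost are illustrative names, not LINQ itself):

```javascript
// Sketch of LINQ's SingleOrDefault semantics (illustrative, not the .NET implementation)
function singleOrDefault(items, predicate) {
  const matches = items.filter(predicate);
  if (matches.length > 1) {
    throw new Error("Sequence contains more than one matching element");
  }
  return matches.length === 1 ? matches[0] : null; // null plays the role of default(T)
}

// The three seed rows, reduced to the columns the query filters on
const seedPosts = [
  { Id: 1, Url: "my-post-1", CreatedBy: "alexander.beletsky" },
  { Id: 2, Url: "my-post-2", CreatedBy: "alexander.beletsky" },
  { Id: 3, Url: "my-post-2", CreatedBy: "alexander.beletsky" },
];

// Same filter as the Get action: both user name and URL must match
function findPost(posts, userName, postUrl) {
  return singleOrDefault(posts, p => p.CreatedBy === userName && p.Url === postUrl);
}
```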

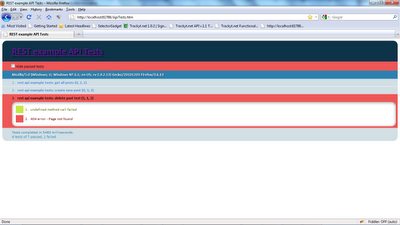

The last red test is the “delete post” test, so let’s implement the delete API call.

Delete post by url method

http://localhost/api/v1/posts/delete/{username}/{posturl}

It receives the username and the post URL, and returns a status in the response. The code is:

```csharp
[HttpDelete]
public JsonResult Delete(string userName, string postUrl)
{
    var blogPost = _context.BlogPosts.Where(p => p.CreatedBy == userName && p.Url == postUrl).SingleOrDefault();

    // DeleteOnSubmit throws ArgumentNullException if no post matched
    _context.BlogPosts.DeleteOnSubmit(blogPost);
    _context.SubmitChanges();

    return Json(new { success = true, data = (string)null });
}
```

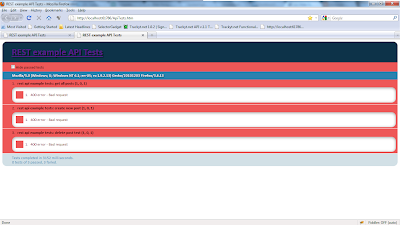

Now all tests are green. Fantastic!

Handle Json Errors

What happens if an exception is thrown within an API method? Let’s create a test and see:

```javascript
test("fail method test", function () {
    var method = 'posts/fail';
    var data = null;
    var type = 'GET';
    var params = ['alexander.beletsky'];
    var call = createCallUrl(this.url, method, params);

    api_test(call, type, data, function (result) {
        ok(result.success == false, method + " expected to be failed");
        same(result.message, "The method or operation is not implemented.");
    });
});
```
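The test relies on the createCallUrl helper from the test framework, whose code is not shown in this post. A plausible sketch, assuming this.url holds the API root (for example http://localhost/api/v1/) and that parameters become URL-encoded path segments; this is a hypothetical reconstruction, not the author's helper:

```javascript
// Hypothetical createCallUrl: joins the API root, the "controller/action"
// method string and the URL-encoded parameters with slashes.
function createCallUrl(baseUrl, method, params) {
  const parts = [baseUrl.replace(/\/+$/, ""), method.replace(/^\/+|\/+$/g, "")];
  for (const param of params || []) {
    parts.push(encodeURIComponent(param));
  }
  return parts.join("/");
}
```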

And add an implementation of the failing method:

```csharp
[HttpGet]
public JsonResult Fail()
{
    throw new NotImplementedException();
}
```

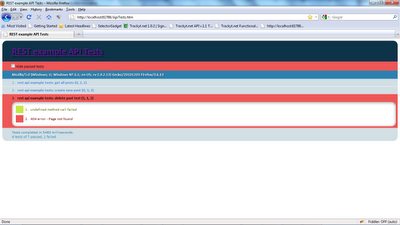

If I run the test, I’ll see such result:

This is not very greceful. It is expected that Json response would contain false in success attribute and message would contain actual exception message.

Of course, it is possible to wrap every method in a try/catch block and return the corresponding JSON in the catch block, but this violates the DRY (don’t repeat yourself) principle and makes the code ugly. It is much better to use an MVC2 action filter attribute for that.

So, we define a new attribute that handles errors: if an exception is thrown within an action method, the exception is wrapped in a JSON object and returned as the response.

```csharp
using System.Net;
using System.Web.Mvc;

namespace Web.Infrastructure
{
    // Turns unhandled exceptions in Ajax calls into { success: false, message } JSON
    public class HandleJsonError : ActionFilterAttribute
    {
        public override void OnActionExecuted(ActionExecutedContext filterContext)
        {
            if (filterContext.HttpContext.Request.IsAjaxRequest() && filterContext.Exception != null)
            {
                filterContext.HttpContext.Response.StatusCode = (int)HttpStatusCode.InternalServerError;
                filterContext.Result = new JsonResult()
                {
                    JsonRequestBehavior = JsonRequestBehavior.AllowGet,
                    Data = new
                    {
                        success = false,
                        message = filterContext.Exception.Message
                    }
                };
                // Mark the exception as handled so MVC does not rethrow it
                filterContext.ExceptionHandled = true;
            }
        }
    }
}
```
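The same idea, reduced to plain JavaScript, may help show what the filter does. In this sketch (handleJsonError and isAjaxRequest are illustrative names), a handler is wrapped so that an exception thrown during an Ajax request becomes a 500 response with a { success: false, message } body, while other requests keep the default error handling. Ajax is detected the way MVC's IsAjaxRequest() does it, by the X-Requested-With: XMLHttpRequest header, which jQuery adds to same-origin requests:

```javascript
// Illustrative analogue of the HandleJsonError filter (not MVC code).
function isAjaxRequest(request) {
  // Header names are case-insensitive in HTTP; this sketch assumes the exact casing
  return (request.headers || {})["X-Requested-With"] === "XMLHttpRequest";
}

function handleJsonError(handler) {
  return function (request) {
    try {
      return handler(request);
    } catch (err) {
      if (!isAjaxRequest(request)) {
        throw err; // non-Ajax requests keep the default error handling
      }
      return {
        statusCode: 500,
        body: JSON.stringify({ success: false, message: err.message }),
      };
    }
  };
}
```

Because only Ajax requests are converted, opening the API URL directly in a browser still shows the regular error page, which can be handy while debugging.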

Add this attribute to the method definition:

```csharp
[HttpGet]
[HandleJsonError]
public JsonResult Fail()
{
    throw new NotImplementedException();
}
```

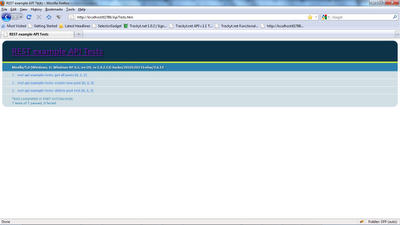

And I’m happy to see that all tests are passing now!

Since we need the same behavior for all API calls, it is better to apply this attribute to the class instead of each method.

```csharp
using System.Web.Mvc;
using Web.Infrastructure;

namespace Web.API.v1.Controllers
{
    [HandleJsonError]
    public class ApiV1Controller : Controller
    {
        // code..
    }
}
```

Examples and code for reuse

All the code is reusable and available in my GitHub repository: https://github.com/alexbeletsky/rest.mvc.example.